Designing a Scalable API Rate Limiter in a Node.js Application

In this article, we will see how to build a scalable API rate limiter in a Node.js application.

Scenario 1:

Let's say you are building a public API service that users access through an API. You need to protect that service from DDoS attacks.

Scenario 2:

Suppose we have built a product and need to offer users a free trial of the product's service.

Solution:

In both scenarios, a rate limiting algorithm is one way to solve the problem.

Rate Limiting Algorithm:

Firstly, a rate limiting algorithm is a way to limit access to APIs. For example, suppose a user sends requests to an API at a rate of 100 requests/second. This can overload the server.

To avoid this problem, we can limit access to the API, for example to 20 requests/minute per user.

Different Rate Limiting Algorithms:

Let's look at the types of rate limiting algorithms used in software application development:

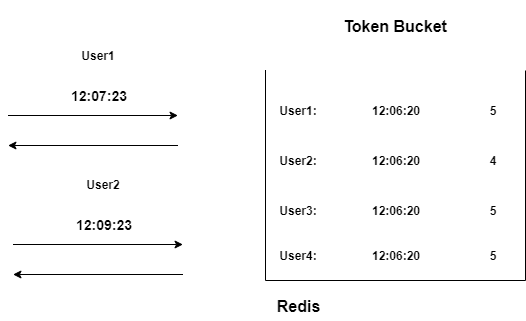

Token Bucket

It stores the maximum number of tokens available for a user's requests per minute. For example, the API rate limiter sets 5 tokens per minute for a user, so the user can send at most 5 requests to the server per minute. After that, the server drops further requests.

Redis is mainly used to store this information so that the request data can be accessed quickly.

How it works

Let's say User1 sends a request to the server. The server checks whether more than a minute has passed between the previous request and the current one. If less than a minute has passed, it checks the tokens remaining for that user.

If the remaining count is zero, the server drops the request. Otherwise, it consumes one token and serves the request.

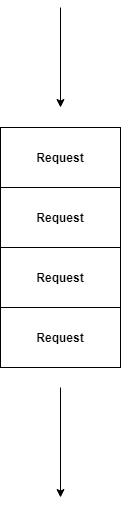
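The token check described above can be sketched in memory as follows. This is only an illustration, not the article's Redis-backed code: the class name `TokenBucket`, its parameters, and the injected `now` timestamp are hypothetical, and the sketch assumes the bucket is refilled to full capacity once a whole interval has elapsed since the last refill.

```javascript
// Minimal in-memory token bucket (illustrative sketch; all names are hypothetical).
class TokenBucket {
  constructor(capacity, refillIntervalMs) {
    this.capacity = capacity; // e.g. 5 tokens
    this.refillIntervalMs = refillIntervalMs; // e.g. 60000 ms = 1 minute
    this.buckets = new Map(); // userId -> { tokens, lastRefill }
  }

  // Returns true if the request is served, false if it is dropped.
  allow(userId, now = Date.now()) {
    let bucket = this.buckets.get(userId);

    // New user, or a full interval has passed: refill to capacity.
    if (!bucket || now - bucket.lastRefill >= this.refillIntervalMs) {
      bucket = { tokens: this.capacity, lastRefill: now };
      this.buckets.set(userId, bucket);
    }

    // No tokens left in this interval: drop the request.
    if (bucket.tokens === 0) return false;

    bucket.tokens -= 1; // consume one token
    return true;
  }
}
```

Passing `now` explicitly makes the behavior easy to check without waiting a real minute; in a server, the default `Date.now()` would be used.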

Leaky Bucket

It is a queue that takes requests in First In, First Out (FIFO) order.

Once the queue is full, the server drops incoming requests until the queue has space for more.

For example, the server gets requests 1, 2, 3 and 4 and accepts them based on the queue size. With a queue size of 4, it accepts requests 1, 2, 3 and 4.

After that, the server gets request 5 and drops it.
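The queue behavior in this example can be sketched as below. The class `LeakyBucket` and its method names are hypothetical, and the sketch leaves out the timer that would drain the queue at a fixed rate in a real server.

```javascript
// Minimal leaky bucket as a bounded FIFO queue (illustrative sketch).
class LeakyBucket {
  constructor(capacity) {
    this.capacity = capacity; // maximum number of queued requests
    this.queue = [];
  }

  // Try to enqueue a request; returns false when the queue is full (request dropped).
  add(request) {
    if (this.queue.length >= this.capacity) return false;
    this.queue.push(request);
    return true;
  }

  // Remove and return the oldest request, or undefined if the queue is empty.
  leak() {
    return this.queue.shift();
  }
}

// In a server, leak() would typically be called on a fixed schedule,
// for example from a setInterval callback that processes one request per tick.
```

With a capacity of 4, requests 1 to 4 are accepted and request 5 is dropped until `leak()` frees a slot.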

Fixed Window Counter

It increments a user's request counter for a particular time window. If the counter crosses a threshold, the server drops the request. It uses Redis to store the request information.

For example, the server gets a request from a user. If the user's request info is present in Redis and the request falls within the same time window as the previous request, it increments the counter.

Once the threshold is reached, the server drops incoming requests for the specified time.

Cons

Let's say the server gets lots of requests at the 55th second of a minute. This won't work as expected: the counter resets when the next minute starts, so the same user can send a full quota again a few seconds later, and the server sees up to twice the threshold within a short span.
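A small in-memory sketch makes the boundary problem concrete. The class `FixedWindowCounter` and its parameters are hypothetical and stand in for the Redis-backed counter; windows are assumed to be aligned to multiples of the window length.

```javascript
// Minimal fixed window counter (illustrative sketch; assumes limit >= 1).
class FixedWindowCounter {
  constructor(limit, windowMs) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.counters = new Map(); // userId -> { windowStart, count }
  }

  // Returns true if the request is allowed, false if it is dropped.
  allow(userId, now = Date.now()) {
    // Start of the window that contains "now".
    const windowStart = Math.floor(now / this.windowMs) * this.windowMs;
    const entry = this.counters.get(userId);

    // First request of this user, or a new window has begun: reset the counter.
    if (!entry || entry.windowStart !== windowStart) {
      this.counters.set(userId, { windowStart, count: 1 });
      return true;
    }

    if (entry.count >= this.limit) return false;
    entry.count += 1;
    return true;
  }
}

// Boundary burst: 5 requests at second 55 and 5 more at second 60 are all allowed,
// so 10 requests pass within 5 seconds despite a limit of 5 per minute.
const burstLimiter = new FixedWindowCounter(5, 60000);
let allowedInBurst = 0;
for (let i = 0; i < 5; i++) if (burstLimiter.allow("user1", 55000)) allowedInBurst++;
for (let i = 0; i < 5; i++) if (burstLimiter.allow("user1", 60000)) allowedInBurst++;
```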

Sliding Logs

It stores a log entry with a timestamp for each request, either in Redis or in memory. For each request, it checks how many log entries exist for the user within the last minute.

If the count exceeds the threshold, the server drops the incoming requests.

On the other hand, this approach has a few disadvantages. If the application receives a million requests, maintaining a log entry for each request in memory is expensive.

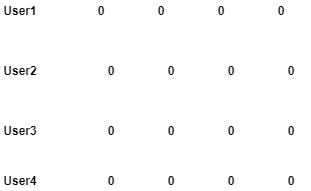
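The sliding log idea can be sketched in memory like this. The class `SlidingLog` is hypothetical; it keeps one timestamp per accepted request, which is exactly the memory cost mentioned above.

```javascript
// Minimal sliding log limiter (illustrative sketch).
class SlidingLog {
  constructor(limit, windowMs) {
    this.limit = limit;
    this.windowMs = windowMs;
    this.logs = new Map(); // userId -> array of request timestamps (ms)
  }

  // Returns true if the request is allowed, false if it is dropped.
  allow(userId, now = Date.now()) {
    const cutoff = now - this.windowMs;

    // Keep only the timestamps that fall inside the sliding window.
    const recent = (this.logs.get(userId) || []).filter((t) => t > cutoff);

    const allowed = recent.length < this.limit;
    if (allowed) recent.push(now); // log this request

    this.logs.set(userId, recent);
    return allowed;
  }
}
```

In this sketch dropped requests are not logged; logging them as well is a design choice that would penalize clients that keep retrying.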

Sliding Window Counter

This approach is somewhat similar to sliding logs. The only difference is that, instead of storing every log entry, we group the user's request data by timestamp.

For example, once the server receives a request from a user, we check the memory for the request timestamp. If it is present, we increment its counter. If it is not, we insert it as a new record.

That way, we don't need to store each request as a separate entry. We can group requests by timestamp and maintain a counter for each group.
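Before moving to Redis, the grouping can be sketched with plain in-memory maps. The class `SlidingWindowCounter`, the one-second grouping granularity, and the injected `nowSec` value are assumptions made for illustration.

```javascript
// Minimal in-memory sliding window counter (illustrative sketch).
class SlidingWindowCounter {
  constructor(limit, windowSec) {
    this.limit = limit;
    this.windowSec = windowSec;
    this.buckets = new Map(); // userId -> Map(unixSecond -> request count)
  }

  // Returns true if the request is allowed, false if it is dropped.
  allow(userId, nowSec = Math.floor(Date.now() / 1000)) {
    const cutoff = nowSec - this.windowSec;
    const counts = this.buckets.get(userId) || new Map();

    // Sum the counters inside the window and discard the expired ones.
    let total = 0;
    for (const [second, count] of counts) {
      if (second <= cutoff) counts.delete(second);
      else total += count;
    }
    this.buckets.set(userId, counts);

    if (total >= this.limit) return false;

    // Group requests that share the same second under one counter.
    counts.set(nowSec, (counts.get(nowSec) || 0) + 1);
    return true;
  }
}
```

Three requests in the same second produce one entry with a counter of 3 rather than three separate log entries.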

Implementing Sliding Window Counter in a Node.js App

Complete source code can be found here

Prerequisites

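Create a directory and initialize package.json using the following command:

    npm init --yes

After that, install Express, redis and moment for the application using the following command:

    npm i express redis@3 moment

The Redis client is used to connect to the Redis server, and moment is used for storing the request timestamp. The code in this article uses the callback-style API of version 3 of the redis package. Version 4 and later expose a promise-based API instead, which is why redis@3 is installed here.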

Firstly, create a file server.js and add the following code.

    const express = require("express");
    const rateLimiter = require("./slidingWindowCounter");

    const app = express();
    const router = express.Router();

    router.get("/", (req, res) => {
      res.send("<h1>API response</h1>");
    });

    // Run the rate limiter on every request before it reaches the routes.
    app.use(rateLimiter);
    app.use("/api", router);

    app.listen(5000, () => {
      console.log("server is running on port 5000");
    });

Secondly, create a file slidingWindowCounter.js and add the following code.

    const redis = require("redis");
    const moment = require("moment");

    // Callback-style client (node-redis v3 API).
    const redisClient = redis.createClient();

    // Maximum number of requests allowed per user per minute.
    const WINDOW_LIMIT = 5;

    module.exports = (req, res, next) => {
      // The user is identified by the "user" request header.
      const userKey = req.headers.user;

      redisClient.exists(userKey, (err, reply) => {
        if (err) {
          console.log("problem with redis");
          process.exit(1);
        }

        if (reply === 1) {
          redisClient.get(userKey, (err, redisResponse) => {
            if (err) {
              console.log("problem with redis");
              process.exit(1);
            }

            let data = JSON.parse(redisResponse);
            let currentTime = moment().unix();
            let lessThanMinuteAgo = moment().subtract(1, "minute").unix();

            // Keep only the entries recorded within the last minute.
            let requestCountPerMinutes = data.filter((item) => {
              return item.requestTime > lessThanMinuteAgo;
            });

            // Sum the counters of those entries.
            let thresHold = 0;
            requestCountPerMinutes.forEach((item) => {
              thresHold = thresHold + item.counter;
            });

            if (thresHold >= WINDOW_LIMIT) {
              // 429 Too Many Requests
              return res
                .status(429)
                .json({ error: 1, message: "throttle limit exceeded" });
            } else {
              // Increment the entry for the current second if one exists.
              let isFound = false;
              data.forEach((element) => {
                if (element.requestTime === currentTime) {
                  isFound = true;
                  element.counter++;
                }
              });

              // Otherwise, insert a new entry for the current second.
              if (!isFound) {
                data.push({
                  requestTime: currentTime,
                  counter: 1,
                });
              }

              redisClient.set(userKey, JSON.stringify(data));
              next();
            }
          });
        } else {
          // First request from this user: store a single entry.
          let data = [];
          let requestData = {
            requestTime: moment().unix(),
            counter: 1,
          };
          data.push(requestData);
          redisClient.set(userKey, JSON.stringify(data));
          next();
        }
      });
    };

- It checks whether the user exists in Redis. If the user exists, it processes the request further. If not, it inserts the user's details with a counter value and the request timestamp.

- If the user already exists, it checks whether the number of requests within the last minute has reached the threshold. If it has, the server drops the request.

- If it has not, it increments the counter of the entry whose timestamp matches the current request. Otherwise, it inserts a new entry.

The complete source code for the scalable API rate limiter in Node.js can be found here

Demo : https://youtu.be/qlQ5XSDFe9c